Data Science And Machine Learning With Python Hands On

Tags: Machine Learning

Complete hands-on machine learning tutorial with data science, Tensorflow, artificial intelligence, and neural networks

Last updated 2022-01-10 | 4.6

What you'll learn

- Build artificial neural networks with Tensorflow and Keras

- Classify images, data, and sentiments using deep learning

- Make predictions using linear regression, polynomial regression, and multivariate regression

Requirements

* You'll need a desktop computer (Windows, Mac, or Linux) capable of running Anaconda 3 or newer. The course will walk you through installing the necessary free software.

* Some prior coding or scripting experience is required.

* At least high school level math skills will be required.

Description

- Build artificial neural networks with Tensorflow and Keras

- Classify images, data, and sentiments using deep learning

- Make predictions using linear regression, polynomial regression, and multivariate regression

- Visualize data with Matplotlib and Seaborn

- Implement machine learning at massive scale with Apache Spark's MLLib

- Understand reinforcement learning - and how to build a Pac-Man bot

- Classify data using K-Means clustering, Support Vector Machines (SVM), KNN, Decision Trees, Naive Bayes, and PCA

- Use train/test and K-Fold cross validation to choose and tune your models

- Build a movie recommender system using item-based and user-based collaborative filtering

- Clean your input data to remove outliers

- Design and evaluate A/B tests using T-Tests and P-Values

Course content

13 sections • 116 lectures

Introduction Preview 02:41

What to expect in this course, who it's for, and the general format we'll follow.

Udemy 101: Getting the Most From This Course Preview 02:10

Installation: Getting Started Preview 00:39

[Activity] WINDOWS: Installing and Using Anaconda & Course Materials Preview 12:37

[Activity] MAC: Installing and Using Anaconda & Course Materials Preview 10:02

[Activity] LINUX: Installing and Using Anaconda & Course Materials Preview 10:57

Python Basics, Part 1 [Optional] Preview 04:59

In a crash course on Python and what's different about it, we'll cover the importance of whitespace in Python scripts, and how to import Python modules.

[Activity] Python Basics, Part 2 [Optional] Preview 05:17

In part 2 of our Python crash course, we'll cover Python data structures including lists, tuples, and dictionaries.

[Activity] Python Basics, Part 3 [Optional] Preview 02:46

In this lesson, we'll see how functions work in Python.

[Activity] Python Basics, Part 4 [Optional] Preview 04:02

We'll wrap up our Python crash course covering Boolean expressions and looping constructs.

Introducing the Pandas Library [Optional] Preview 10:08

Pandas is a library we'll use throughout the course for loading, examining, and manipulating data. Let's see how it works with some examples, and you'll have an exercise at the end too.

Types of Data (Numerical, Categorical, Ordinal) Preview 06:58

We cover the differences between continuous and discrete numerical data, categorical data, and ordinal data.

Mean, Median, Mode Preview 05:26

A refresher on mean, median, and mode - and when it's appropriate to use each.

[Activity] Using mean, median, and mode in Python Preview 08:21

We'll use mean, median, and mode in some real Python code, and set you loose to write some code of your own.
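
As a rough sketch of the idea, assuming a small made-up list of incomes (not the course's data), Python's built-in `statistics` module covers all three measures:

```python
import statistics

# Hypothetical sample: incomes in thousands, with one extreme value.
incomes = [32, 45, 45, 51, 58, 60, 400]

mean_income = statistics.mean(incomes)      # pulled upward by the outlier
median_income = statistics.median(incomes)  # middle value of the sorted data
mode_income = statistics.mode(incomes)      # most frequently occurring value

print(mean_income, median_income, mode_income)
```

The single large income drags the mean well above the median, which is one reason the median is often preferred for skewed data.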

[Activity] Variation and Standard Deviation Preview 11:12

We'll cover how to compute the variance and standard deviation of a data distribution, and work through some examples in Python.
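
A minimal sketch of the calculation, using a made-up data set chosen for illustration: the variance is the average squared difference from the mean, and the standard deviation is its square root.

```python
import math
import statistics

data = [1, 4, 5, 4, 8]  # hypothetical observations

mean = sum(data) / len(data)
squared_diffs = [(x - mean) ** 2 for x in data]

# Population variance divides by N; sample variance divides by N - 1.
population_variance = sum(squared_diffs) / len(data)
sample_variance = sum(squared_diffs) / (len(data) - 1)
population_std = math.sqrt(population_variance)

# The standard library computes the same quantities directly.
print(population_variance, statistics.pvariance(data))
print(sample_variance, statistics.variance(data))
print(population_std, statistics.pstdev(data))
```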

Probability Density Function; Probability Mass Function Preview 03:27

Introducing the concepts of probability density functions (PDF's) and probability mass functions (PMF's).

Common Data Distributions (Normal, Binomial, Poisson, etc) Preview 07:45

We'll show examples of continuous, normal, exponential, binomial, and Poisson distributions using IPython.

[Activity] Percentiles and Moments Preview 12:33

We'll look at some examples of percentiles and quartiles in data distributions, and then move on to the concept of the first four moments of data sets.

[Activity] A Crash Course in matplotlib Preview 13:46

An overview of different tricks in matplotlib for creating graphs of your data, using different graph types and styles.

[Activity] Advanced Visualization with Seaborn Preview 17:30

[Activity] Covariance and Correlation Preview 11:31

We cover the concepts of covariance and correlation, which are used to look for relationships between different sets of attributes, along with some examples in Python.
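
One way to sketch both measures in plain Python, assuming hypothetical page-speed and purchase-amount figures invented for this example:

```python
import math

def covariance(xs, ys):
    """Sample covariance: average product of deviations from the means."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (len(xs) - 1)

def correlation(xs, ys):
    """Pearson correlation: covariance scaled by both standard deviations."""
    return covariance(xs, ys) / math.sqrt(covariance(xs, xs) * covariance(ys, ys))

# Hypothetical data: page load time (seconds) vs. amount spent (dollars).
page_speeds = [1.0, 2.0, 3.0, 4.0, 5.0]
purchase_amounts = [50.0, 42.0, 31.0, 18.0, 9.0]

print(covariance(page_speeds, purchase_amounts))
print(correlation(page_speeds, purchase_amounts))
```

Covariance depends on the units of the data, while correlation always falls between -1 and 1, which makes it easier to interpret.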

[Exercise] Conditional Probability Preview 16:04

We cover the concepts and equations behind conditional probability, and use it to try to find a relationship between age and purchases in some fabricated data using Python.
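
As an illustration only, with a tiny hand-written set of records standing in for the fabricated data, the conditional probability of a purchase given an age group reduces to a ratio of counts:

```python
from collections import Counter

# Hypothetical records: (age decade, made a purchase?)
records = [
    (20, True), (20, False), (20, False), (20, False),
    (30, True), (30, True), (30, False), (30, False),
    (40, True), (40, True), (40, True), (40, False),
]

totals = Counter(age for age, _ in records)
purchases = Counter(age for age, bought in records if bought)

def p_purchase_given_age(age):
    # P(purchase | age) = P(purchase and age) / P(age); the shared
    # denominator len(records) cancels, leaving a ratio of counts.
    return purchases[age] / totals[age]

p_purchase = sum(purchases.values()) / len(records)

for age in sorted(totals):
    print(age, p_purchase_given_age(age))
print("overall", p_purchase)
```

If age and purchases were unrelated, each conditional probability would sit close to the overall purchase probability.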

Exercise Solution: Conditional Probability of Purchase by Age Preview 02:20

Here we'll go over my solution to the exercise I challenged you with in the previous lecture - changing our fabricated data to have no real correlation between ages and purchases, and seeing if you can detect that using conditional probability.

Bayes' Theorem Preview 05:23

An overview of Bayes' Theorem, and an example of using it to uncover misleading statistics surrounding the accuracy of drug testing.
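
A small sketch of the drug-testing calculation, where the prevalence and accuracy figures are assumptions chosen for illustration rather than numbers from the lecture:

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(user | positive test) via Bayes' Theorem."""
    # Total probability of a positive test, from users and non-users.
    evidence = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / evidence

# Assumed figures: 0.3% of people use the drug, the test catches 99% of
# users, and it wrongly flags 1% of non-users.
p_user_given_positive = posterior(prior=0.003, sensitivity=0.99,
                                  false_positive_rate=0.01)
print(p_user_given_positive)
```

Under these assumptions, fewer than a quarter of positive results come from actual users, even though the test sounds "99% accurate".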

[Activity] Linear Regression Preview 11:01

We introduce the concept of linear regression and how it works, and use it to fit a line to some sample data using Python.
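
A minimal sketch of ordinary least squares in plain Python, on made-up sample points (a library routine would normally do this for you):

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical sample data that roughly follows y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

slope, intercept = fit_line(xs, ys)
predicted = slope * 6.0 + intercept  # prediction for an unseen x
print(slope, intercept, predicted)
```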

[Activity] Polynomial Regression Preview 08:04

We cover the concepts of polynomial regression, and use it to fit a more complex page speed - purchase relationship in Python.

[Activity] Multiple Regression, and Predicting Car Prices Preview 16:26

Multivariate models let us predict some value given more than one attribute. We cover the concept, then use it to build a model in Python to predict car prices based on their number of doors, mileage, and number of cylinders. We'll also get our first look at the statsmodels library in Python.

Multi-Level Models Preview 04:36

We'll just cover the concept of multi-level modeling, as it is a very advanced topic. But you'll get the ideas and challenges behind it.

Supervised vs. Unsupervised Learning, and Train/Test Preview 08:57

The concepts of supervised and unsupervised machine learning, and how to evaluate the ability of a machine learning model to predict new values using the train/test technique.

[Activity] Using Train/Test to Prevent Overfitting a Polynomial Regression Preview 05:47

We'll apply train/test to a real example using Python.

Bayesian Methods: Concepts Preview 03:59

We'll introduce the concept of Naive Bayes and how we might apply it to the problem of building a spam classifier.

[Activity] Implementing a Spam Classifier with Naive Bayes Preview 08:05

We'll actually write a working spam classifier, using real email training data and a surprisingly small amount of code!

K-Means Clustering Preview 07:23

K-Means is a way to identify things that are similar to each other. It's a case of unsupervised learning, which could result in clusters you never expected!

[Activity] Clustering people based on income and age Preview 05:14

We'll apply K-Means clustering to find interesting groupings of people based on their age and income.
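
To give a feel for what happens under the hood, here is a bare-bones sketch of the K-Means loop. The (age, income) points and the starting centroids are invented for this example, and a real project would more likely use a library implementation with feature scaling.

```python
import math

def k_means(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate assignment and centroid update."""
    for _ in range(iterations):
        # Assignment step: index of the nearest centroid for each point.
        labels = [min(range(len(centroids)),
                      key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(
                    tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave empty clusters in place
        centroids = new_centroids
    return labels, centroids

# Hypothetical people as (age, income in thousands).
people = [(25, 30), (27, 32), (30, 35), (55, 90), (58, 95), (60, 100)]
labels, centroids = k_means(people, centroids=[(25, 30), (60, 100)])
print(labels, centroids)
```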

Measuring Entropy Preview 03:09

Entropy is a measure of the disorder in a data set - we'll learn what that means, and how to compute it mathematically.
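
As a quick sketch, Shannon entropy can be computed from the proportion of each class in a data set. The labels below are placeholders chosen for illustration.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy in bits: -sum(p * log2(p)) over each class proportion p."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

uniform_set = ["hired"] * 4                         # one class only: no disorder
mixed_set = ["hired", "hired", "rejected", "rejected"]  # evenly split: maximum disorder

print(entropy(uniform_set), entropy(mixed_set))
```

A data set containing a single class has an entropy of zero, and an even two-way split has an entropy of one bit.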

[Activity] WINDOWS: Installing Graphviz Preview 00:22

[Activity] MAC: Installing Graphviz Preview 01:16

[Activity] LINUX: Installing Graphviz Preview 00:54

Decision Trees: Concepts Preview 08:43

Decision trees can automatically create a flow chart for making some decision, based on machine learning! Let's learn how they work.

[Activity] Decision Trees: Predicting Hiring Decisions Preview 09:47

We'll create a decision tree and an entire "random forest" to predict hiring decisions for job candidates.

Ensemble Learning Preview 05:59

Random forests are an example of ensemble learning; we'll cover other techniques for combining the results of many models to create a better result than any one could produce on its own.

[Activity] XGBoost Preview 15:29

XGBoost is perhaps the most powerful machine learning algorithm today, and it's really easy to use. We'll cover how it works, how to tune it, and run an example on the Iris data set showing how powerful XGBoost is.

Support Vector Machines (SVM) Overview Preview 04:27

Support Vector Machines are an advanced technique for classifying data that has multiple features. They treat those features as dimensions, and partition this higher-dimensional space using "support vectors."

[Activity] Using SVM to cluster people using scikit-learn Preview 09:29

We'll use scikit-learn to easily classify people using a C-Support Vector Classifier.

User-Based Collaborative Filtering Preview 07:57

One way to recommend items is to look for other people similar to you based on their behavior, and recommend stuff they liked that you haven't seen yet.

Item-Based Collaborative Filtering Preview 08:15

The shortcomings of user-based collaborative filtering can be solved by flipping it on its head, and looking at relationships between items instead of relationships between people.

[Activity] Finding Movie Similarities using Cosine Similarity Preview 09:08

We'll use the real-world MovieLens data set of movie ratings to take a first crack at finding movies that are similar to each other, which is the first step in item-based collaborative filtering.
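
A minimal sketch of the similarity measure itself, assuming each movie is represented as a vector of ratings from the same users in the same order (the ratings below are invented, not taken from MovieLens):

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length rating vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # no ratings to compare
    return dot / (norm_a * norm_b)

# Hypothetical ratings from the same four users for three movies.
movie_a = [5, 4, 1, 1]
movie_b = [4, 5, 1, 2]
movie_c = [1, 1, 5, 4]

print(cosine_similarity(movie_a, movie_b))  # similar taste profile
print(cosine_similarity(movie_a, movie_c))  # quite different profile
```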

[Activity] Improving the Results of Movie Similarities Preview 07:59

Our initial results for movies similar to Star Wars weren't very good. Let's figure out why, and fix it.

[Activity] Making Movie Recommendations with Item-Based Collaborative Filtering Preview 10:22

We'll implement a complete item-based collaborative filtering system that uses real-world movie ratings data to recommend movies to any user.

[Exercise] Improve the recommender's results Preview 05:29

As a student exercise, try some of my ideas - or some ideas of your own - to make the results of our item-based collaborative filter even better.

K-Nearest-Neighbors: Concepts Preview 03:44

KNN is a very simple supervised machine learning technique; we'll quickly cover the concept here.

[Activity] Using KNN to predict a rating for a movie Preview 12:29

We'll use the simple KNN technique and apply it to a more complicated problem: finding the most similar movies to a given movie just given its genre and rating information, and then using those "nearest neighbors" to predict the movie's rating.

Dimensionality Reduction; Principal Component Analysis (PCA) Preview 05:44

Data that includes many features or many different vectors can be thought of as having many dimensions. Often it's useful to reduce those dimensions to something more easily visualized, to compress the data, or just to distill the most important information from a data set (that is, the information that contributes the most to the data's variance). Principal Component Analysis and Singular Value Decomposition do that.

[Activity] PCA Example with the Iris data set Preview 09:05

We'll use scikit-learn's built-in PCA system to reduce the 4-dimensional Iris data set down to 2 dimensions, while still preserving most of its variance.

Data Warehousing Overview: ETL and ELT Preview 09:05

Cloud-based data storage and analysis systems like Hadoop, Hive, Spark, and MapReduce are turning the field of data warehousing on its head. Instead of extracting, transforming, and then loading data into a data warehouse, the transformation step is now more efficiently done using a cluster after it's already been loaded. With computing and storage resources so cheap, this new approach now makes sense.

Reinforcement Learning Preview 12:44

We'll describe the concept of reinforcement learning - including Markov Decision Processes, Q-Learning, and Dynamic Programming - all using a simple example of developing an intelligent Pac-Man.
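
The core Q-Learning update can be sketched in a few lines. The state names, action names, rewards, and the `alpha` and `gamma` values here are all hypothetical choices for illustration.

```python
from collections import defaultdict

ACTIONS = ["up", "down", "left", "right"]

# Q-table: estimated long-term value of each action in each state.
q_table = defaultdict(lambda: {action: 0.0 for action in ACTIONS})

def q_update(state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Move Q(state, action) toward reward + discounted best future value."""
    best_next = max(q_table[next_state].values())
    target = reward + gamma * best_next
    q_table[state][action] += alpha * (target - q_table[state][action])

# Eating a pellet is rewarded; the value then propagates one step back.
q_update("corridor", "right", reward=10.0, next_state="pellet")
q_update("start", "right", reward=0.0, next_state="corridor")

print(q_table["corridor"]["right"], q_table["start"]["right"])
```

Repeating such updates over many explored moves lets reward information flow backward from rewarding states to the moves that lead to them.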

[Activity] Reinforcement Learning & Q-Learning with Gym Preview 12:56

Understanding a Confusion Matrix Preview 05:17

What's a confusion matrix, and how do I read it?
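
As a rough sketch, assuming made-up true and predicted labels for a binary classifier, the four cells of a confusion matrix can be tallied directly, and precision, recall, and F1 follow from them:

```python
# Hypothetical labels: 1 = positive class, 0 = negative class.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

precision = tp / (tp + fp)  # of predicted positives, how many were right
recall = tp / (tp + fn)     # of actual positives, how many were found
f1 = 2 * precision * recall / (precision + recall)

print([[tn, fp], [fn, tp]])
print(precision, recall, f1)
```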

Measuring Classifiers (Precision, Recall, F1, ROC, AUC) Preview 06:35

Bias/Variance Tradeoff Preview 06:15

Bias and Variance both contribute to overall error; understand these components of error and how they relate to each other.

[Activity] K-Fold Cross-Validation to avoid overfitting Preview 10:26

We'll introduce the concept of K-Fold Cross-Validation to make train/test even more robust, and apply it to a real model.
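
To illustrate just the splitting step, here is a small sketch that divides sample indices into K folds. The helper name `k_fold_indices` is made up for this example, and in practice the data would usually be shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

folds = list(k_fold_indices(10, 3))
for train, test in folds:
    print("train:", train, "test:", test)
```

Each sample lands in the test set exactly once; the model is trained K times and the K scores are averaged.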

Data Cleaning and Normalization Preview 07:10

Cleaning your raw input data is often the most important, and time-consuming, part of your job as a data scientist!

[Activity] Cleaning web log data Preview 10:56

In this example, we'll try to find the top-viewed web pages on a web site - and see how much data pollution makes that into a very difficult task!

Normalizing numerical data Preview 03:22

A brief reminder: some models require input data to be normalized, or within the same range as each other. Always read the documentation on the techniques you are using.
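
Two common ways to normalize a feature can be sketched as follows. The ages are invented sample values, and both helpers assume the values are not all identical.

```python
import statistics

def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to zero mean and scale to unit standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

ages = [20, 30, 40, 60]  # hypothetical feature column

print(min_max_scale(ages))
print(standardize(ages))
```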

[Activity] Detecting outliers Preview 06:21

A review of how outliers can affect your results, and how to identify and deal with them in a principled manner.

Feature Engineering and the Curse of Dimensionality Preview 06:03

Imputation Techniques for Missing Data Preview 07:48

Handling Unbalanced Data: Oversampling, Undersampling, and SMOTE Preview 05:35

Binning, Transforming, Encoding, Scaling, and Shuffling Preview 07:51

Warning about Java 11 and Spark 3! Preview 00:21

Spark installation notes for MacOS and Linux users Preview 01:28

[Activity] Installing Spark - Part 1 Preview 06:59

We'll present an overview of the steps needed to install Apache Spark on your desktop in standalone mode, and get started by getting a Java Development Kit installed on your system.

[Activity] Installing Spark - Part 2 Preview 07:20

We'll install Spark itself, along with all the associated environment variables and ancillary files and settings needed for it to function properly.

Spark Introduction Preview 09:10

A high-level overview of Apache Spark, what it is, and how it works.

Spark and the Resilient Distributed Dataset (RDD) Preview 11:42

We'll go in more depth on the core of Spark - the RDD object, and what you can do with it.

Introducing MLLib Preview 05:09

A quick overview of MLLib's capabilities, and the new data types it introduces to Spark.

Introduction to Decision Trees in Spark Preview 16:15

We'll walk through an example of coding up and running a decision tree using Apache Spark's MLLib! In this exercise, we try to predict if a job candidate will be hired based on their work and educational history, using a decision tree that can be distributed across an entire cluster with Spark.

[Activity] K-Means Clustering in Spark Preview 11:23

We'll take the same example of clustering people by age and income from our earlier K-Means lecture - but solve it in Spark!

TF / IDF Preview 06:44

We'll introduce the concept of TF-IDF (Term Frequency / Inverse Document Frequency) and how it applies to search problems, in preparation for using it with MLLib.
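
A minimal sketch of one common TF-IDF variant, using three tiny made-up documents. Several weighting schemes exist, and this is not necessarily the one MLLib uses.

```python
import math
from collections import Counter

# Hypothetical documents, already split into words.
documents = [
    "spark makes big data simple".split(),
    "big data needs big clusters".split(),
    "pandas makes small data simple".split(),
]

def tf_idf(term, doc, docs):
    """Term frequency in one document times inverse document frequency."""
    tf = Counter(doc)[term] / len(doc)
    doc_freq = sum(1 for d in docs if term in d)
    idf = math.log(len(docs) / doc_freq) if doc_freq else 0.0
    return tf * idf

for term in ["spark", "makes", "data"]:
    print(term, tf_idf(term, documents[0], documents))
```

A word that appears in every document, such as "data" here, scores zero, while a word unique to one document scores highest for that document.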

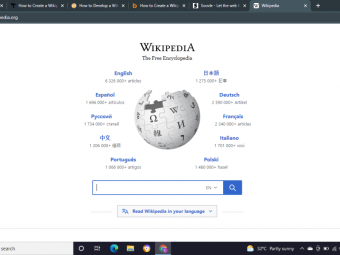

[Activity] Searching Wikipedia with Spark Preview 08:21

Let's use TF-IDF, Spark, and MLLib to create a rudimentary search engine for real Wikipedia pages!

[Activity] Using the Spark 2.0 DataFrame API for MLLib Preview 08:07

Spark 2.0 introduced a new API for MLLib based on DataFrame objects; we'll look at an example of using this to create and use a linear regression model.

Deploying Models to Real-Time Systems Preview 08:42

High-level thoughts on various ways to deploy your trained models to production systems including apps and websites.

A/B Testing Concepts Preview 08:23

Running controlled experiments on your website usually involves a technique called the A/B test. We'll learn how they work.

T-Tests and P-Values Preview 05:59

How to determine the significance of an A/B test's results, and measure the probability of the results being just from random chance, using T-Tests, the T-statistic, and the P-value.
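
As a sketch of the T-statistic alone, here is Welch's unequal-variance form computed on fabricated order amounts for two groups. Turning the statistic into a P-value requires the t-distribution, which a statistics library would normally supply, so that step is left out here.

```python
import math
import statistics

def welch_t_statistic(a, b):
    """Difference in means divided by its estimated standard error."""
    mean_a, mean_b = statistics.mean(a), statistics.mean(b)
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    return (mean_a - mean_b) / math.sqrt(var_a / len(a) + var_b / len(b))

# Hypothetical order amounts for the control and treatment groups.
control = [25.0, 27.5, 24.0, 26.5, 25.5, 26.0]
treatment = [27.0, 29.5, 28.0, 30.0, 28.5, 29.0]

t = welch_t_statistic(treatment, control)
print(t)
```

A T-statistic far from zero suggests a real difference between the groups, while a value near zero suggests the difference could easily be noise.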

[Activity] Hands-on With T-Tests Preview 06:04

We'll fabricate A/B test data from several scenarios, and measure the T-statistic and P-Value for each using Python.

Determining How Long to Run an Experiment Preview 03:24

Some A/B tests just don't affect customer behavior one way or another. How do you know how long to let an experiment run for before giving up?

A/B Test Gotchas Preview 09:26

There are many limitations associated with running short-term A/B tests - novelty effects, seasonal effects, and more can lead you to the wrong decisions. We'll discuss the forces that may result in misleading A/B test results so you can watch out for them.

Deep Learning Pre-Requisites Preview 11:43

If you skipped ahead, I'll show you where to get the course materials for just this section. And we'll cover some pre-requisite concepts for understanding how neural networks operate: gradient descent, autodiff, and softmax.
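
Two of these prerequisites can be sketched briefly in plain Python. The scores, the quadratic function, and the learning rate below are arbitrary choices for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to approach a minimum."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x

probabilities = softmax([2.0, 1.0, 0.1])

# Minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)

print(probabilities, minimum)
```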

The History of Artificial Neural Networks Preview 11:14

We'll cover the evolution of artificial neural networks from 1943 to modern-day architectures, which is a great way to understand how they work.

[Activity] Deep Learning in the Tensorflow Playground Preview 12:00

Google's Tensorflow Playground lets you experiment with deep neural networks and understand them - without writing a line of code!

Deep Learning Details Preview 09:29

Let's dive into the details on how modern multi-layer perceptrons are trained and tuned.

Introducing Tensorflow Preview 11:29

We'll cover Google's open-source Tensorflow Python library, and see how it can help you create and train neural networks.

Important note about Tensorflow 2 Preview 00:23

[Activity] Using Tensorflow, Part 1 Preview 13:11

We'll use Tensorflow to create a neural network that classifies handwritten numerals from the MNIST data set. Part 1 of 2.

[Activity] Using Tensorflow, Part 2 Preview 12:03

We'll use Tensorflow to create a neural network that classifies handwritten numerals from the MNIST data set. Part 2 of 2.

[Activity] Introducing Keras Preview 13:33

Tensorflow 1.9 offers a higher-level API called Keras, which makes it easier to construct your neural networks. We'll use Keras to solve the same handwriting recognition problem - but with much less code.

[Activity] Using Keras to Predict Political Affiliations Preview 12:05

As another hands-on example, we'll use Keras to build a neural network that learns how to determine if a politician is Republican or Democrat just based on their votes.

Convolutional Neural Networks (CNN's) Preview 11:28

CNN's mimic your visual cortex, and can find features in one, two, or three-dimensional data even if you're not sure where exactly that feature is.

[Activity] Using CNN's for handwriting recognition Preview 08:02

CNN's are better suited to image data, and we'll prove that by using a CNN in Keras on the MNIST data.

Recurrent Neural Networks (RNN's) Preview 11:02

RNN's can handle sequences of data, like events over time or words in a sentence. Learn what's different about how they work, how they are trained, and ways to optimize them.

[Activity] Using a RNN for sentiment analysis Preview 09:37

Let's implement an RNN in Keras to determine positive or negative sentiments for real movie reviews from IMDb!

[Activity] Transfer Learning Preview 12:14

We'll see how transfer learning makes it trivially easy to use pre-trained models for common AI tasks.

Tuning Neural Networks: Learning Rate and Batch Size Hyperparameters Preview 04:39

Deep Learning Regularization with Dropout and Early Stopping Preview 06:21

The Ethics of Deep Learning Preview 11:02

As with any new technology, sometimes we can become overzealous in how we use it. A few cautionary tales to make sure your deep learning work does more good than harm.

Variational Auto-Encoders (VAE's) - how they work Preview 10:23

We'll show how Variational Auto-Encoders (VAE's) pair an encoder and a decoder in a neural network to create latent vectors that can be used to reconstruct synthetic images.

Variational Auto-Encoders (VAE) - Hands-on with Fashion MNIST Preview 26:31

We'll walk through a Jupyter Notebook illustrating a Variational Auto-Encoder trained on the Fashion MNIST data set. When done, we will generate synthetic images of articles of clothing, and see how VAE's can also be used for unsupervised learning.

Generative Adversarial Networks (GAN's) - How they work Preview 07:39

We'll learn how Generative Adversarial Networks (GAN's) pair a generator fed random data with a discriminator that it fights against. This type of network can be used to generate random synthetic images of faces, synthetic music, synthetic data sets... whatever you can dream up. And yes, it's the tech behind "deepfakes" and all those viral face-swapping and aging apps.

Generative Adversarial Networks (GAN's) - Playing with some demos Preview 11:22

To get a better feel for how GAN's are trained, we'll watch a simple model of 2D points learn over time, train a GAN to create synthetic handwritten numbers, and make a synthetic painting using AI and GAN's.

Generative Adversarial Networks (GAN's) - Hands-on with Fashion MNIST Preview 15:20

We'll dive into an actual notebook you can run and experiment with, that trains a GAN to fabricate synthetic random images of clothing!

Learning More about Deep Learning Preview 01:44

Some suggested resources for continuing your education on deep learning, artificial intelligence, and neural networks.

Your final project assignment: Mammogram Classification Preview 06:19

It's time to apply what you've learned in this course! You'll be given a real data set of mammogram masses, and training data on whether these masses were determined to be benign or malignant. Apply the different machine learning techniques you've used in this course to find the best one for automating the diagnosis for these masses.

Final project review Preview 10:26

I'll walk you through my own solution to your final project, so you can compare your results against mine.

More to Explore Preview 02:59

Where to go from here - recommendations for books, websites, and career advice to get you into the data science job you want.

Don't Forget to Leave a Rating! Preview 00:23

If you enjoyed this course, please leave a star rating for it!
